Resurrect3D

Resurrect3D is an online educational platform for visualizing, annotating, and displaying difficult-to-study objects.

From anatomy to architecture, paintings to sculpture, and mechanical devices to astronomical bodies, Resurrect3D leverages advances in imaging technologies (multispectral imaging, computed tomography, radiography, MRI, sonography, and others) to let viewers see with new eyes and interact with objects with a sensory plenitude that profoundly enhances our ability to understand and learn.

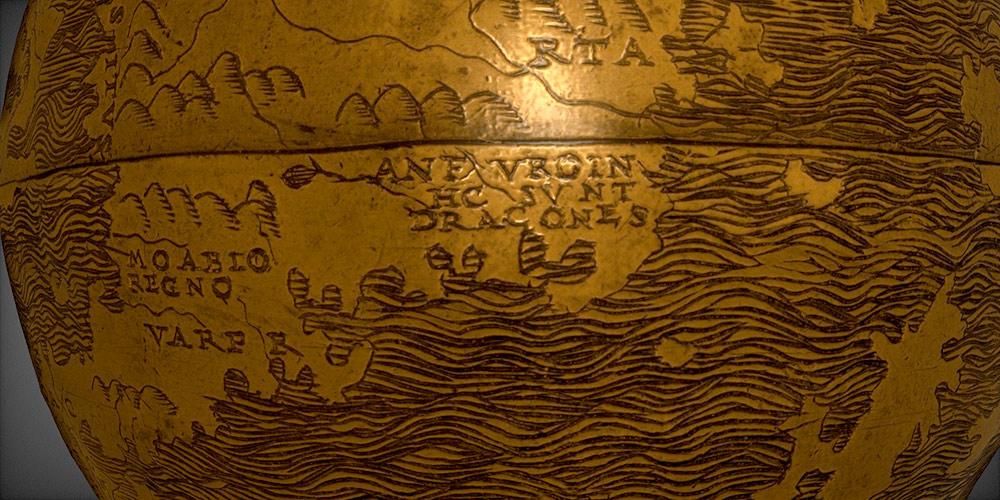

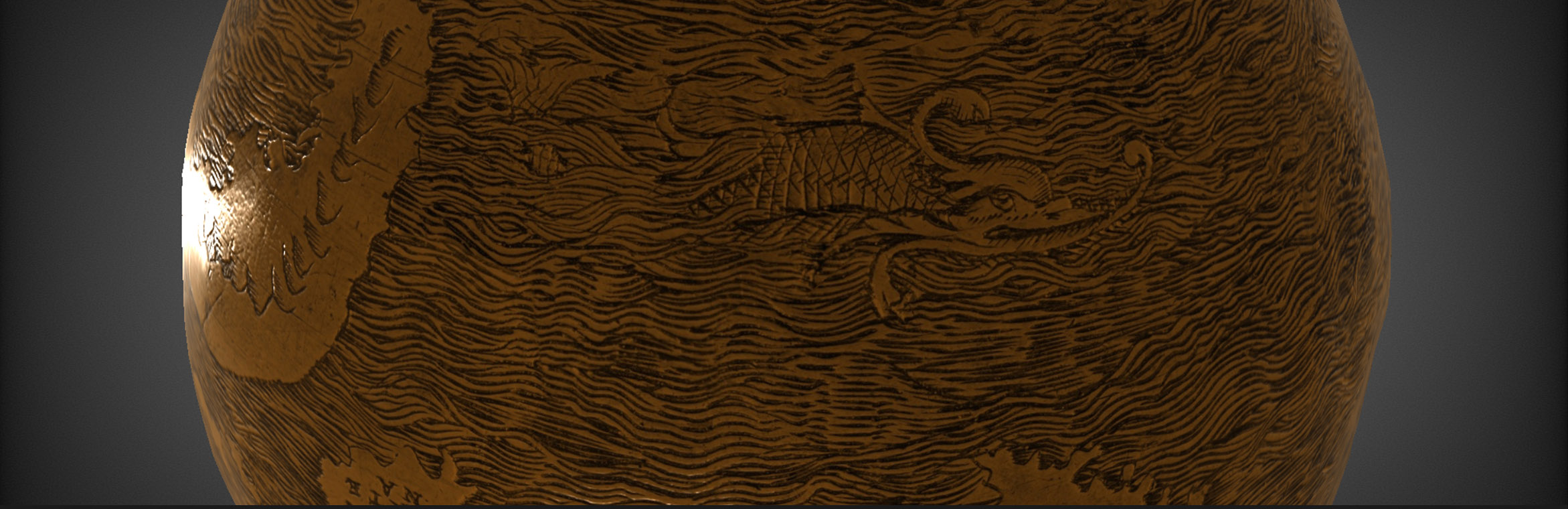

The beta version of Resurrect3D focuses on damaged cultural heritage objects (manuscripts, maps, globes, paintings) that have been imaged multispectrally. While the image capture technologies reveal hidden or illegible elements of the objects, Resurrect3D lets the viewer explore these formerly invisible perspectives one spectral band at a time in a 3D digital environment, using virtual-, augmented-, and mixed-reality goggles. Optimized for the Magic Leap mixed-reality headset, these digital surrogates can be manipulated by the viewer (rotated, zoomed, even entered) from within the digital environment using gestures and movement. Surrogates are further enhanced with metadata and supplementary media (information panels, audio, images, video) that the viewer can likewise launch from within the digital environment, creating an experiential microcosm not unlike the viewing interface in the movie Minority Report.
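To make "exploring individual spectral bands" concrete, here is a minimal sketch of how a single band might be pulled out of a multispectral capture and turned into a displayable grayscale image. It assumes a hypothetical band-sequential float layout (`SpectralCube`) and a hypothetical helper (`extractBand`). Neither is Resurrect3D's documented data format or API. Each band is stretched to its own min/max so that faint features in that band use the full 0..255 range.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical in-memory layout (an assumption, not Resurrect3D's format):
// band-sequential storage, i.e. every pixel of band 0, then every pixel of
// band 1, and so on, with one float sample per pixel per band.
struct SpectralCube {
    int width = 0;
    int height = 0;
    int bands = 0;
    std::vector<float> samples;  // expected size: width * height * bands
};

// Copies one spectral band out of the cube and linearly stretches it to
// 0..255, producing a grayscale image that could be uploaded as a texture.
std::vector<std::uint8_t> extractBand(const SpectralCube& cube, int band) {
    if (band < 0 || band >= cube.bands) {
        throw std::out_of_range("band index out of range");
    }
    const std::size_t pixels =
        static_cast<std::size_t>(cube.width) * static_cast<std::size_t>(cube.height);
    assert(cube.samples.size() == pixels * static_cast<std::size_t>(cube.bands));

    // Iterator range covering exactly the requested band.
    const auto first = cube.samples.begin() +
                       static_cast<std::ptrdiff_t>(static_cast<std::size_t>(band) * pixels);
    const auto last = first + static_cast<std::ptrdiff_t>(pixels);

    std::vector<std::uint8_t> out(pixels, 0);
    if (pixels == 0) {
        return out;
    }
    const auto mm = std::minmax_element(first, last);
    const float minV = *mm.first;
    const float range = *mm.second - minV;
    if (range <= 0.0f) {
        return out;  // flat band: nothing to stretch, leave it black
    }
    for (std::size_t i = 0; i < pixels; ++i) {
        const float t = (first[static_cast<std::ptrdiff_t>(i)] - minV) / range;  // 0..1
        out[i] = static_cast<std::uint8_t>(t * 255.0f + 0.5f);                  // round to 0..255
    }
    return out;
}
```

A band-stepping control in the viewer would call `extractBand(cube, k)` for each `k` and swap the resulting texture onto the surrogate. A real pipeline might instead use a global or percentile-based stretch to keep brightness comparable across bands.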

A crucial feature of Resurrect3D is Deep Lecture, effectively a three-dimensional, immersive PowerPoint. In this mode, a speaker wearing 3D goggles addresses a similarly equipped audience, both physically present and online, while exploring one or several related objects. Built on a node-based socket architecture in C++, Resurrect3D mirrors everything the speaker sees and launches, along with the audio feed, to the goggles of every audience member. Because the audience can join online as well as in person, a single Deep Lecture could reach thousands of viewers.
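The mirroring idea can be illustrated with a small fan-out sketch. It assumes a speaker node that holds one connected stream socket per audience node and sends each action as a hypothetical newline-delimited text message (`MirrorEvent`, `encode`, `broadcast`, and `receiveLine` are illustrative names, not Resurrect3D's actual protocol). It uses only POSIX socket calls and does not cover the audio feed.

```cpp
#include <cassert>
#include <cerrno>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>
#include <sys/socket.h>
#include <unistd.h>

// Hypothetical message describing one action the speaker performed,
// such as rotating an object or launching an information panel.
struct MirrorEvent {
    std::uint64_t seq;    // increasing counter so clients can detect gaps
    std::string action;   // e.g. "rotate", "launch" (illustrative values)
    std::string target;   // identifier of the object or panel acted upon
};

// Newline-delimited text encoding; assumes action/target contain no spaces or newlines.
std::string encode(const MirrorEvent& ev) {
    return std::to_string(ev.seq) + ' ' + ev.action + ' ' + ev.target + '\n';
}

// Writes the whole buffer, retrying on partial writes and EINTR.
bool sendAll(int fd, const std::string& data) {
    assert(fd >= 0);
    std::size_t sent = 0;
    while (sent < data.size()) {
        const ssize_t n = ::write(fd, data.data() + sent, data.size() - sent);
        if (n < 0) {
            if (errno == EINTR) continue;
            return false;
        }
        sent += static_cast<std::size_t>(n);
    }
    return true;
}

// Fan-out: the speaker node sends the same event to every audience socket.
// Returns how many audience sockets accepted the full message.
std::size_t broadcast(const std::vector<int>& audience, const MirrorEvent& ev) {
    const std::string msg = encode(ev);
    std::size_t delivered = 0;
    for (int fd : audience) {
        if (sendAll(fd, msg)) ++delivered;
    }
    return delivered;
}

// Audience side: reads one newline-terminated message, without the newline.
std::string receiveLine(int fd) {
    std::string line;
    char c = 0;
    for (;;) {
        const ssize_t n = ::read(fd, &c, 1);
        if (n < 0 && errno == EINTR) continue;
        if (n <= 0 || c == '\n') break;
        line.push_back(c);
    }
    return line;
}
```

For a local test, `socketpair(AF_UNIX, SOCK_STREAM, 0, fds)` can stand in for a network connection, with one end held by the speaker and the other by an audience member. A production system serving thousands of clients would also need to handle `SIGPIPE` from disconnected peers and keep slow clients from stalling the speaker, for example with non-blocking sockets or per-client send queues.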